diff --git a/README.md b/README.md

new file mode 100644

index 0000000..160ccf9

--- /dev/null

+++ b/README.md

@@ -0,0 +1,198 @@

+

+

Grad-SVC based Grad-TTS from HUAWEI Noah's Ark Lab

+

+This project is named as Grad-SVC, or GVC for short. Its core technology is diffusion, but so different from other diffusion based SVC models. Codes are adapted from Grad-TTS and so-vits-svc-5.0. So the features from so-vits-svc-5.0 will be used in this project.

+

+The project will be completed in the coming months ~~~

+

+

+## Setup Environment

+1. Install project dependencies

+

+ ```shell

+ pip install -r requirements.txt

+ ```

+

+2. Download the Timbre Encoder: [Speaker-Encoder by @mueller91](https://drive.google.com/drive/folders/15oeBYf6Qn1edONkVLXe82MzdIi3O_9m3), put `best_model.pth.tar` into `speaker_pretrain/`.

+

+3. Download [hubert_soft model](https://github.com/bshall/hubert/releases/tag/v0.1),put `hubert-soft-0d54a1f4.pt` into `hubert_pretrain/`.

+

+4. Download pretrained [nsf_bigvgan_pretrain_32K.pth](https://github.com/PlayVoice/NSF-BigVGAN/releases/augment), and put it into `bigvgan_pretrain/`.

+

+5. Download pretrain model [gvc.pretrain.pth](), and put it into `grad_pretrain/`.

+ ```shell

+ python gvc_inference.py --config configs/base.yaml --model ./grad_pretrain/gvc.pretrain.pth --spk ./configs/singers/singer0001.npy --wave test.wav

+ ```

+

+## Dataset preparation

+Put the dataset into the `data_raw` directory following the structure below.

+```

+data_raw

+├───speaker0

+│ ├───000001.wav

+│ ├───...

+│ └───000xxx.wav

+└───speaker1

+ ├───000001.wav

+ ├───...

+ └───000xxx.wav

+```

+

+## Data preprocessing

+After preprocessing you will get an output with following structure.

+```

+data_gvc/

+└── waves-16k

+│ └── speaker0

+│ │ ├── 000001.wav

+│ │ └── 000xxx.wav

+│ └── speaker1

+│ ├── 000001.wav

+│ └── 000xxx.wav

+└── waves-32k

+│ └── speaker0

+│ │ ├── 000001.wav

+│ │ └── 000xxx.wav

+│ └── speaker1

+│ ├── 000001.wav

+│ └── 000xxx.wav

+└── mel

+│ └── speaker0

+│ │ ├── 000001.mel.pt

+│ │ └── 000xxx.mel.pt

+│ └── speaker1

+│ ├── 000001.mel.pt

+│ └── 000xxx.mel.pt

+└── pitch

+│ └── speaker0

+│ │ ├── 000001.pit.npy

+│ │ └── 000xxx.pit.npy

+│ └── speaker1

+│ ├── 000001.pit.npy

+│ └── 000xxx.pit.npy

+└── hubert

+│ └── speaker0

+│ │ ├── 000001.vec.npy

+│ │ └── 000xxx.vec.npy

+│ └── speaker1

+│ ├── 000001.vec.npy

+│ └── 000xxx.vec.npy

+└── speaker

+│ └── speaker0

+│ │ ├── 000001.spk.npy

+│ │ └── 000xxx.spk.npy

+│ └── speaker1

+│ ├── 000001.spk.npy

+│ └── 000xxx.spk.npy

+└── singer

+ ├── speaker0.spk.npy

+ └── speaker1.spk.npy

+```

+

+1. Re-sampling

+ - Generate audio with a sampling rate of 16000Hz in `./data_gvc/waves-16k`

+ ```

+ python prepare/preprocess_a.py -w ./data_raw -o ./data_gvc/waves-16k -s 16000

+ ```

+

+ - Generate audio with a sampling rate of 32000Hz in `./data_gvc/waves-32k`

+ ```

+ python prepare/preprocess_a.py -w ./data_raw -o ./data_gvc/waves-32k -s 32000

+ ```

+2. Use 16K audio to extract pitch

+ ```

+ python prepare/preprocess_f0.py -w data_gvc/waves-16k/ -p data_gvc/pitch

+ ```

+3. use 32k audio to extract mel

+ ```

+ python prepare/preprocess_spec.py -w data_gvc/waves-32k/ -s data_gvc/mel

+ ```

+4. Use 16K audio to extract hubert

+ ```

+ python prepare/preprocess_hubert.py -w data_gvc/waves-16k/ -v data_gvc/hubert

+ ```

+5. Use 16k audio to extract timbre code

+ ```

+ python prepare/preprocess_speaker.py data_gvc/waves-16k/ data_gvc/speaker

+ ```

+6. Extract the average value of the timbre code for inference

+ ```

+ python prepare/preprocess_speaker_ave.py data_gvc/speaker/ data_gvc/singer

+ ```

+8. Use 32k audio to generate training index

+ ```

+ python prepare/preprocess_train.py

+ ```

+9. Training file debugging

+ ```

+ python prepare/preprocess_zzz.py

+ ```

+

+## Train

+1. Start training

+ ```

+ python gvc_trainer.py

+ ```

+2. Resume training

+ ```

+ python gvc_trainer.py -p logs/grad_svc/grad_svc_***.pth

+ ```

+3. Log visualization

+ ```

+ tensorboard --logdir logs/

+ ```

+

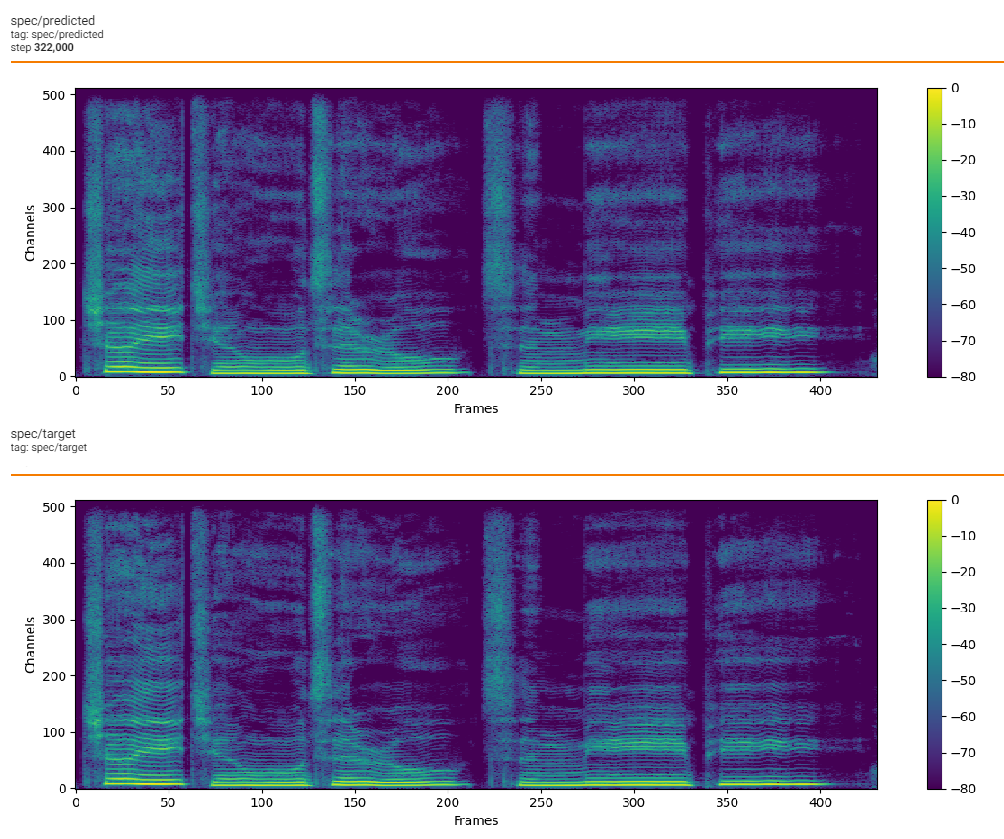

+## Loss

+

+

+

+

+## Inference

+

+1. Export inference model

+ ```

+ python gvc_export.py --checkpoint_path logs/grad_svc/grad_svc_***.pt

+ ```

+

+2. Inference

+ - if there is no need to adjust `f0`, just run the following command.

+ ```

+ python gvc_inference.py --model gvc.pth --spk ./data_gvc/singer/your_singer.spk.npy --wave test.wav --shift 0

+ ```

+ - if `f0` will be adjusted manually, follow the steps:

+

+ 1. use hubert to extract content vector

+ ```

+ python hubert/inference.py -w test.wav -v test.vec.npy

+ ```

+ 2. extract the F0 parameter to the csv text format

+ ```

+ python pitch/inference.py -w test.wav -p test.csv

+ ```

+ 3. final inference

+ ```

+ python gvc_inference.py --model gvc.pth --spk ./data_gvc/singer/your_singer.spk.npy --wave test.wav --vec test.vec.npy --pit test.csv --shift 0

+ ```

+

+3. Convert mel to wave

+ ```

+ python gvc_inference_wave.py --mel gvc_out.mel.pt --pit gvc_tmp.pit.csv

+ ```

+

+## Code sources and references

+

+https://github.com/huawei-noah/Speech-Backbones/blob/main/Grad-TTS

+

+https://github.com/facebookresearch/speech-resynthesis [paper](https://arxiv.org/abs/2104.00355)

+

+https://github.com/jaywalnut310/vits [paper](https://arxiv.org/abs/2106.06103)

+

+https://github.com/NVIDIA/BigVGAN [paper](https://arxiv.org/abs/2206.04658)

+

+https://github.com/mindslab-ai/univnet [paper](https://arxiv.org/abs/2106.07889)

+

+https://github.com/mozilla/TTS

+

+https://github.com/bshall/soft-vc

+

+https://github.com/maxrmorrison/torchcrepe

diff --git a/assets/grad_svc_loss.jpg b/assets/grad_svc_loss.jpg

new file mode 100644

index 0000000..ceea76c

Binary files /dev/null and b/assets/grad_svc_loss.jpg differ

diff --git a/assets/grad_svc_mel.jpg b/assets/grad_svc_mel.jpg

new file mode 100644

index 0000000..476a9a6

Binary files /dev/null and b/assets/grad_svc_mel.jpg differ

diff --git a/bigvgan/LICENSE b/bigvgan/LICENSE

new file mode 100644

index 0000000..328ed6e

--- /dev/null

+++ b/bigvgan/LICENSE

@@ -0,0 +1,21 @@

+MIT License

+

+Copyright (c) 2022 PlayVoice

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/bigvgan/README.md b/bigvgan/README.md

new file mode 100644

index 0000000..7816c1e

--- /dev/null

+++ b/bigvgan/README.md

@@ -0,0 +1,138 @@

+

+

Neural Source-Filter BigVGAN

+ Just For Fun

+

+

+

+

+## Dataset preparation

+

+Put the dataset into the data_raw directory according to the following file structure

+```shell

+data_raw

+├───speaker0

+│ ├───000001.wav

+│ ├───...

+│ └───000xxx.wav

+└───speaker1

+ ├───000001.wav

+ ├───...

+ └───000xxx.wav

+```

+

+## Install dependencies

+

+- 1 software dependency

+

+ > pip install -r requirements.txt

+

+- 2 download [release](https://github.com/PlayVoice/NSF-BigVGAN/releases/tag/debug) model, and test

+

+ > python nsf_bigvgan_inference.py --config configs/nsf_bigvgan.yaml --model nsf_bigvgan_g.pth --wave test.wav

+

+## Data preprocessing

+

+- 1, re-sampling: 32kHz

+

+ > python prepare/preprocess_a.py -w ./data_raw -o ./data_bigvgan/waves-32k

+

+- 3, extract pitch

+

+ > python prepare/preprocess_f0.py -w data_bigvgan/waves-32k/ -p data_bigvgan/pitch

+

+- 4, extract mel: [100, length]

+

+ > python prepare/preprocess_spec.py -w data_bigvgan/waves-32k/ -s data_bigvgan/mel

+

+- 5, generate training index

+

+ > python prepare/preprocess_train.py

+

+```shell

+data_bigvgan/

+│

+└── waves-32k

+│ └── speaker0

+│ │ ├── 000001.wav

+│ │ └── 000xxx.wav

+│ └── speaker1

+│ ├── 000001.wav

+│ └── 000xxx.wav

+└── pitch

+│ └── speaker0

+│ │ ├── 000001.pit.npy

+│ │ └── 000xxx.pit.npy

+│ └── speaker1

+│ ├── 000001.pit.npy

+│ └── 000xxx.pit.npy

+└── mel

+ └── speaker0

+ │ ├── 000001.mel.pt

+ │ └── 000xxx.mel.pt

+ └── speaker1

+ ├── 000001.mel.pt

+ └── 000xxx.mel.pt

+

+```

+

+## Train

+

+- 1, start training

+

+ > python nsf_bigvgan_trainer.py -c configs/nsf_bigvgan.yaml -n nsf_bigvgan

+

+- 2, resume training

+

+ > python nsf_bigvgan_trainer.py -c configs/nsf_bigvgan.yaml -n nsf_bigvgan -p chkpt/nsf_bigvgan/***.pth

+

+- 3, view log

+

+ > tensorboard --logdir logs/

+

+

+## Inference

+

+- 1, export inference model

+

+ > python nsf_bigvgan_export.py --config configs/maxgan.yaml --checkpoint_path chkpt/nsf_bigvgan/***.pt

+

+- 2, extract mel

+

+ > python spec/inference.py -w test.wav -m test.mel.pt

+

+- 3, extract F0

+

+ > python pitch/inference.py -w test.wav -p test.csv

+

+- 4, infer

+

+ > python nsf_bigvgan_inference.py --config configs/nsf_bigvgan.yaml --model nsf_bigvgan_g.pth --wave test.wav

+

+ or

+

+ > python nsf_bigvgan_inference.py --config configs/nsf_bigvgan.yaml --model nsf_bigvgan_g.pth --mel test.mel.pt --pit test.csv

+

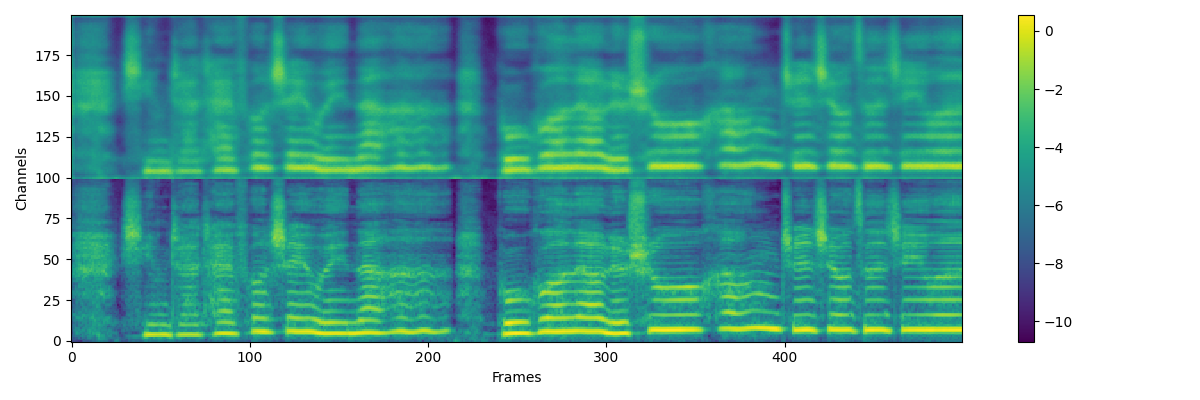

+## Augmentation of mel

+For the over smooth output of acoustic model, we use gaussian blur for mel when train vocoder

+```

+# gaussian blur

+model_b = get_gaussian_kernel(kernel_size=5, sigma=2, channels=1).to(device)

+# mel blur

+mel_b = mel[:, None, :, :]

+mel_b = model_b(mel_b)

+mel_b = torch.squeeze(mel_b, 1)

+mel_r = torch.rand(1).to(device) * 0.5

+mel_b = (1 - mel_r) * mel_b + mel_r * mel

+# generator

+optim_g.zero_grad()

+fake_audio = model_g(mel_b, pit)

+```

+

+

+## Source of code and References

+

+https://github.com/nii-yamagishilab/project-NN-Pytorch-scripts/tree/master/project/01-nsf

+

+https://github.com/mindslab-ai/univnet [[paper]](https://arxiv.org/abs/2106.07889)

+

+https://github.com/NVIDIA/BigVGAN [[paper]](https://arxiv.org/abs/2206.04658)

\ No newline at end of file

diff --git a/bigvgan/configs/nsf_bigvgan.yaml b/bigvgan/configs/nsf_bigvgan.yaml

new file mode 100644

index 0000000..809b129

--- /dev/null

+++ b/bigvgan/configs/nsf_bigvgan.yaml

@@ -0,0 +1,60 @@

+data:

+ train_file: 'files/train.txt'

+ val_file: 'files/valid.txt'

+#############################

+train:

+ num_workers: 4

+ batch_size: 8

+ optimizer: 'adam'

+ seed: 1234

+ adam:

+ lr: 0.0002

+ beta1: 0.8

+ beta2: 0.99

+ mel_lamb: 5

+ stft_lamb: 2.5

+ pretrain: ''

+ lora: False

+#############################

+audio:

+ n_mel_channels: 100

+ segment_length: 12800 # Should be multiple of 320

+ filter_length: 1024

+ hop_length: 320 # WARNING: this can't be changed.

+ win_length: 1024

+ sampling_rate: 32000

+ mel_fmin: 40.0

+ mel_fmax: 16000.0

+#############################

+gen:

+ mel_channels: 100

+ upsample_rates: [5,4,2,2,2,2]

+ upsample_kernel_sizes: [15,8,4,4,4,4]

+ upsample_initial_channel: 320

+ resblock_kernel_sizes: [3,7,11]

+ resblock_dilation_sizes: [[1,3,5], [1,3,5], [1,3,5]]

+#############################

+mpd:

+ periods: [2,3,5,7,11]

+ kernel_size: 5

+ stride: 3

+ use_spectral_norm: False

+ lReLU_slope: 0.2

+#############################

+mrd:

+ resolutions: "[(1024, 120, 600), (2048, 240, 1200), (4096, 480, 2400), (512, 50, 240)]" # (filter_length, hop_length, win_length)

+ use_spectral_norm: False

+ lReLU_slope: 0.2

+#############################

+dist_config:

+ dist_backend: "nccl"

+ dist_url: "tcp://localhost:54321"

+ world_size: 1

+#############################

+log:

+ info_interval: 100

+ eval_interval: 1000

+ save_interval: 10000

+ num_audio: 6

+ pth_dir: 'chkpt'

+ log_dir: 'logs'

diff --git a/bigvgan/model/__init__.py b/bigvgan/model/__init__.py

new file mode 100644

index 0000000..986a0cf

--- /dev/null

+++ b/bigvgan/model/__init__.py

@@ -0,0 +1 @@

+from .alias.act import SnakeAlias

\ No newline at end of file

diff --git a/bigvgan/model/alias/__init__.py b/bigvgan/model/alias/__init__.py

new file mode 100644

index 0000000..a2318b6

--- /dev/null

+++ b/bigvgan/model/alias/__init__.py

@@ -0,0 +1,6 @@

+# Adapted from https://github.com/junjun3518/alias-free-torch under the Apache License 2.0

+# LICENSE is in incl_licenses directory.

+

+from .filter import *

+from .resample import *

+from .act import *

\ No newline at end of file

diff --git a/bigvgan/model/alias/act.py b/bigvgan/model/alias/act.py

new file mode 100644

index 0000000..308344f

--- /dev/null

+++ b/bigvgan/model/alias/act.py

@@ -0,0 +1,129 @@

+# Adapted from https://github.com/junjun3518/alias-free-torch under the Apache License 2.0

+# LICENSE is in incl_licenses directory.

+

+import torch

+import torch.nn as nn

+import torch.nn.functional as F

+

+from torch import sin, pow

+from torch.nn import Parameter

+from .resample import UpSample1d, DownSample1d

+

+

+class Activation1d(nn.Module):

+ def __init__(self,

+ activation,

+ up_ratio: int = 2,

+ down_ratio: int = 2,

+ up_kernel_size: int = 12,

+ down_kernel_size: int = 12):

+ super().__init__()

+ self.up_ratio = up_ratio

+ self.down_ratio = down_ratio

+ self.act = activation

+ self.upsample = UpSample1d(up_ratio, up_kernel_size)

+ self.downsample = DownSample1d(down_ratio, down_kernel_size)

+

+ # x: [B,C,T]

+ def forward(self, x):

+ x = self.upsample(x)

+ x = self.act(x)

+ x = self.downsample(x)

+

+ return x

+

+

+class SnakeBeta(nn.Module):

+ '''

+ A modified Snake function which uses separate parameters for the magnitude of the periodic components

+ Shape:

+ - Input: (B, C, T)

+ - Output: (B, C, T), same shape as the input

+ Parameters:

+ - alpha - trainable parameter that controls frequency

+ - beta - trainable parameter that controls magnitude

+ References:

+ - This activation function is a modified version based on this paper by Liu Ziyin, Tilman Hartwig, Masahito Ueda:

+ https://arxiv.org/abs/2006.08195

+ Examples:

+ >>> a1 = snakebeta(256)

+ >>> x = torch.randn(256)

+ >>> x = a1(x)

+ '''

+

+ def __init__(self, in_features, alpha=1.0, alpha_trainable=True, alpha_logscale=False):

+ '''

+ Initialization.

+ INPUT:

+ - in_features: shape of the input

+ - alpha - trainable parameter that controls frequency

+ - beta - trainable parameter that controls magnitude

+ alpha is initialized to 1 by default, higher values = higher-frequency.

+ beta is initialized to 1 by default, higher values = higher-magnitude.

+ alpha will be trained along with the rest of your model.

+ '''

+ super(SnakeBeta, self).__init__()

+ self.in_features = in_features

+ # initialize alpha

+ self.alpha_logscale = alpha_logscale

+ if self.alpha_logscale: # log scale alphas initialized to zeros

+ self.alpha = Parameter(torch.zeros(in_features) * alpha)

+ self.beta = Parameter(torch.zeros(in_features) * alpha)

+ else: # linear scale alphas initialized to ones

+ self.alpha = Parameter(torch.ones(in_features) * alpha)

+ self.beta = Parameter(torch.ones(in_features) * alpha)

+ self.alpha.requires_grad = alpha_trainable

+ self.beta.requires_grad = alpha_trainable

+ self.no_div_by_zero = 0.000000001

+

+ def forward(self, x):

+ '''

+ Forward pass of the function.

+ Applies the function to the input elementwise.

+ SnakeBeta = x + 1/b * sin^2 (xa)

+ '''

+ alpha = self.alpha.unsqueeze(

+ 0).unsqueeze(-1) # line up with x to [B, C, T]

+ beta = self.beta.unsqueeze(0).unsqueeze(-1)

+ if self.alpha_logscale:

+ alpha = torch.exp(alpha)

+ beta = torch.exp(beta)

+ x = x + (1.0 / (beta + self.no_div_by_zero)) * pow(sin(x * alpha), 2)

+ return x

+

+

+class Mish(nn.Module):

+ """

+ Mish activation function is proposed in "Mish: A Self

+ Regularized Non-Monotonic Neural Activation Function"

+ paper, https://arxiv.org/abs/1908.08681.

+ """

+

+ def __init__(self):

+ super().__init__()

+

+ def forward(self, x):

+ return x * torch.tanh(F.softplus(x))

+

+

+class SnakeAlias(nn.Module):

+ def __init__(self,

+ channels,

+ up_ratio: int = 2,

+ down_ratio: int = 2,

+ up_kernel_size: int = 12,

+ down_kernel_size: int = 12):

+ super().__init__()

+ self.up_ratio = up_ratio

+ self.down_ratio = down_ratio

+ self.act = SnakeBeta(channels, alpha_logscale=True)

+ self.upsample = UpSample1d(up_ratio, up_kernel_size)

+ self.downsample = DownSample1d(down_ratio, down_kernel_size)

+

+ # x: [B,C,T]

+ def forward(self, x):

+ x = self.upsample(x)

+ x = self.act(x)

+ x = self.downsample(x)

+

+ return x

\ No newline at end of file

diff --git a/bigvgan/model/alias/filter.py b/bigvgan/model/alias/filter.py

new file mode 100644

index 0000000..7ad6ea8

--- /dev/null

+++ b/bigvgan/model/alias/filter.py

@@ -0,0 +1,95 @@

+# Adapted from https://github.com/junjun3518/alias-free-torch under the Apache License 2.0

+# LICENSE is in incl_licenses directory.

+

+import torch

+import torch.nn as nn

+import torch.nn.functional as F

+import math

+

+if 'sinc' in dir(torch):

+ sinc = torch.sinc

+else:

+ # This code is adopted from adefossez's julius.core.sinc under the MIT License

+ # https://adefossez.github.io/julius/julius/core.html

+ # LICENSE is in incl_licenses directory.

+ def sinc(x: torch.Tensor):

+ """

+ Implementation of sinc, i.e. sin(pi * x) / (pi * x)

+ __Warning__: Different to julius.sinc, the input is multiplied by `pi`!

+ """

+ return torch.where(x == 0,

+ torch.tensor(1., device=x.device, dtype=x.dtype),

+ torch.sin(math.pi * x) / math.pi / x)

+

+

+# This code is adopted from adefossez's julius.lowpass.LowPassFilters under the MIT License

+# https://adefossez.github.io/julius/julius/lowpass.html

+# LICENSE is in incl_licenses directory.

+def kaiser_sinc_filter1d(cutoff, half_width, kernel_size): # return filter [1,1,kernel_size]

+ even = (kernel_size % 2 == 0)

+ half_size = kernel_size // 2

+

+ #For kaiser window

+ delta_f = 4 * half_width

+ A = 2.285 * (half_size - 1) * math.pi * delta_f + 7.95

+ if A > 50.:

+ beta = 0.1102 * (A - 8.7)

+ elif A >= 21.:

+ beta = 0.5842 * (A - 21)**0.4 + 0.07886 * (A - 21.)

+ else:

+ beta = 0.

+ window = torch.kaiser_window(kernel_size, beta=beta, periodic=False)

+

+ # ratio = 0.5/cutoff -> 2 * cutoff = 1 / ratio

+ if even:

+ time = (torch.arange(-half_size, half_size) + 0.5)

+ else:

+ time = torch.arange(kernel_size) - half_size

+ if cutoff == 0:

+ filter_ = torch.zeros_like(time)

+ else:

+ filter_ = 2 * cutoff * window * sinc(2 * cutoff * time)

+ # Normalize filter to have sum = 1, otherwise we will have a small leakage

+ # of the constant component in the input signal.

+ filter_ /= filter_.sum()

+ filter = filter_.view(1, 1, kernel_size)

+

+ return filter

+

+

+class LowPassFilter1d(nn.Module):

+ def __init__(self,

+ cutoff=0.5,

+ half_width=0.6,

+ stride: int = 1,

+ padding: bool = True,

+ padding_mode: str = 'replicate',

+ kernel_size: int = 12):

+ # kernel_size should be even number for stylegan3 setup,

+ # in this implementation, odd number is also possible.

+ super().__init__()

+ if cutoff < -0.:

+ raise ValueError("Minimum cutoff must be larger than zero.")

+ if cutoff > 0.5:

+ raise ValueError("A cutoff above 0.5 does not make sense.")

+ self.kernel_size = kernel_size

+ self.even = (kernel_size % 2 == 0)

+ self.pad_left = kernel_size // 2 - int(self.even)

+ self.pad_right = kernel_size // 2

+ self.stride = stride

+ self.padding = padding

+ self.padding_mode = padding_mode

+ filter = kaiser_sinc_filter1d(cutoff, half_width, kernel_size)

+ self.register_buffer("filter", filter)

+

+ #input [B, C, T]

+ def forward(self, x):

+ _, C, _ = x.shape

+

+ if self.padding:

+ x = F.pad(x, (self.pad_left, self.pad_right),

+ mode=self.padding_mode)

+ out = F.conv1d(x, self.filter.expand(C, -1, -1),

+ stride=self.stride, groups=C)

+

+ return out

\ No newline at end of file

diff --git a/bigvgan/model/alias/resample.py b/bigvgan/model/alias/resample.py

new file mode 100644

index 0000000..750e6c3

--- /dev/null

+++ b/bigvgan/model/alias/resample.py

@@ -0,0 +1,49 @@

+# Adapted from https://github.com/junjun3518/alias-free-torch under the Apache License 2.0

+# LICENSE is in incl_licenses directory.

+

+import torch.nn as nn

+from torch.nn import functional as F

+from .filter import LowPassFilter1d

+from .filter import kaiser_sinc_filter1d

+

+

+class UpSample1d(nn.Module):

+ def __init__(self, ratio=2, kernel_size=None):

+ super().__init__()

+ self.ratio = ratio

+ self.kernel_size = int(6 * ratio // 2) * 2 if kernel_size is None else kernel_size

+ self.stride = ratio

+ self.pad = self.kernel_size // ratio - 1

+ self.pad_left = self.pad * self.stride + (self.kernel_size - self.stride) // 2

+ self.pad_right = self.pad * self.stride + (self.kernel_size - self.stride + 1) // 2

+ filter = kaiser_sinc_filter1d(cutoff=0.5 / ratio,

+ half_width=0.6 / ratio,

+ kernel_size=self.kernel_size)

+ self.register_buffer("filter", filter)

+

+ # x: [B, C, T]

+ def forward(self, x):

+ _, C, _ = x.shape

+

+ x = F.pad(x, (self.pad, self.pad), mode='replicate')

+ x = self.ratio * F.conv_transpose1d(

+ x, self.filter.expand(C, -1, -1), stride=self.stride, groups=C)

+ x = x[..., self.pad_left:-self.pad_right]

+

+ return x

+

+

+class DownSample1d(nn.Module):

+ def __init__(self, ratio=2, kernel_size=None):

+ super().__init__()

+ self.ratio = ratio

+ self.kernel_size = int(6 * ratio // 2) * 2 if kernel_size is None else kernel_size

+ self.lowpass = LowPassFilter1d(cutoff=0.5 / ratio,

+ half_width=0.6 / ratio,

+ stride=ratio,

+ kernel_size=self.kernel_size)

+

+ def forward(self, x):

+ xx = self.lowpass(x)

+

+ return xx

\ No newline at end of file

diff --git a/bigvgan/model/bigv.py b/bigvgan/model/bigv.py

new file mode 100644

index 0000000..029362c

--- /dev/null

+++ b/bigvgan/model/bigv.py

@@ -0,0 +1,64 @@

+import torch

+import torch.nn as nn

+

+from torch.nn import Conv1d

+from torch.nn.utils import weight_norm, remove_weight_norm

+from .alias.act import SnakeAlias

+

+

+def init_weights(m, mean=0.0, std=0.01):

+ classname = m.__class__.__name__

+ if classname.find("Conv") != -1:

+ m.weight.data.normal_(mean, std)

+

+

+def get_padding(kernel_size, dilation=1):

+ return int((kernel_size*dilation - dilation)/2)

+

+

+class AMPBlock(torch.nn.Module):

+ def __init__(self, channels, kernel_size=3, dilation=(1, 3, 5)):

+ super(AMPBlock, self).__init__()

+ self.convs1 = nn.ModuleList([

+ weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[0],

+ padding=get_padding(kernel_size, dilation[0]))),

+ weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[1],

+ padding=get_padding(kernel_size, dilation[1]))),

+ weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=dilation[2],

+ padding=get_padding(kernel_size, dilation[2])))

+ ])

+ self.convs1.apply(init_weights)

+

+ self.convs2 = nn.ModuleList([

+ weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=1,

+ padding=get_padding(kernel_size, 1))),

+ weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=1,

+ padding=get_padding(kernel_size, 1))),

+ weight_norm(Conv1d(channels, channels, kernel_size, 1, dilation=1,

+ padding=get_padding(kernel_size, 1)))

+ ])

+ self.convs2.apply(init_weights)

+

+ # total number of conv layers

+ self.num_layers = len(self.convs1) + len(self.convs2)

+

+ # periodic nonlinearity with snakebeta function and anti-aliasing

+ self.activations = nn.ModuleList([

+ SnakeAlias(channels) for _ in range(self.num_layers)

+ ])

+

+ def forward(self, x):

+ acts1, acts2 = self.activations[::2], self.activations[1::2]

+ for c1, c2, a1, a2 in zip(self.convs1, self.convs2, acts1, acts2):

+ xt = a1(x)

+ xt = c1(xt)

+ xt = a2(xt)

+ xt = c2(xt)

+ x = xt + x

+ return x

+

+ def remove_weight_norm(self):

+ for l in self.convs1:

+ remove_weight_norm(l)

+ for l in self.convs2:

+ remove_weight_norm(l)

\ No newline at end of file

diff --git a/bigvgan/model/generator.py b/bigvgan/model/generator.py

new file mode 100644

index 0000000..3406c32

--- /dev/null

+++ b/bigvgan/model/generator.py

@@ -0,0 +1,143 @@

+import torch

+import torch.nn as nn

+import torch.nn.functional as F

+import numpy as np

+

+from torch.nn import Conv1d

+from torch.nn import ConvTranspose1d

+from torch.nn.utils import weight_norm

+from torch.nn.utils import remove_weight_norm

+

+from .nsf import SourceModuleHnNSF

+from .bigv import init_weights, AMPBlock, SnakeAlias

+

+

+class Generator(torch.nn.Module):

+ # this is our main BigVGAN model. Applies anti-aliased periodic activation for resblocks.

+ def __init__(self, hp):

+ super(Generator, self).__init__()

+ self.hp = hp

+ self.num_kernels = len(hp.gen.resblock_kernel_sizes)

+ self.num_upsamples = len(hp.gen.upsample_rates)

+ # pre conv

+ self.conv_pre = nn.utils.weight_norm(

+ Conv1d(hp.gen.mel_channels, hp.gen.upsample_initial_channel, 7, 1, padding=3))

+ # nsf

+ self.f0_upsamp = torch.nn.Upsample(

+ scale_factor=np.prod(hp.gen.upsample_rates))

+ self.m_source = SourceModuleHnNSF(sampling_rate=hp.audio.sampling_rate)

+ self.noise_convs = nn.ModuleList()

+ # transposed conv-based upsamplers. does not apply anti-aliasing

+ self.ups = nn.ModuleList()

+ for i, (u, k) in enumerate(zip(hp.gen.upsample_rates, hp.gen.upsample_kernel_sizes)):

+ # print(f'ups: {i} {k}, {u}, {(k - u) // 2}')

+ # base

+ self.ups.append(

+ weight_norm(

+ ConvTranspose1d(

+ hp.gen.upsample_initial_channel // (2 ** i),

+ hp.gen.upsample_initial_channel // (2 ** (i + 1)),

+ k,

+ u,

+ padding=(k - u) // 2)

+ )

+ )

+ # nsf

+ if i + 1 < len(hp.gen.upsample_rates):

+ stride_f0 = np.prod(hp.gen.upsample_rates[i + 1:])

+ stride_f0 = int(stride_f0)

+ self.noise_convs.append(

+ Conv1d(

+ 1,

+ hp.gen.upsample_initial_channel // (2 ** (i + 1)),

+ kernel_size=stride_f0 * 2,

+ stride=stride_f0,

+ padding=stride_f0 // 2,

+ )

+ )

+ else:

+ self.noise_convs.append(

+ Conv1d(1, hp.gen.upsample_initial_channel //

+ (2 ** (i + 1)), kernel_size=1)

+ )

+

+ # residual blocks using anti-aliased multi-periodicity composition modules (AMP)

+ self.resblocks = nn.ModuleList()

+ for i in range(len(self.ups)):

+ ch = hp.gen.upsample_initial_channel // (2 ** (i + 1))

+ for k, d in zip(hp.gen.resblock_kernel_sizes, hp.gen.resblock_dilation_sizes):

+ self.resblocks.append(AMPBlock(ch, k, d))

+

+ # post conv

+ self.activation_post = SnakeAlias(ch)

+ self.conv_post = Conv1d(ch, 1, 7, 1, padding=3, bias=False)

+ # weight initialization

+ self.ups.apply(init_weights)

+

+ def forward(self, x, f0, train=True):

+ # nsf

+ f0 = f0[:, None]

+ f0 = self.f0_upsamp(f0).transpose(1, 2)

+ har_source = self.m_source(f0)

+ har_source = har_source.transpose(1, 2)

+ # pre conv

+ if train:

+ x = x + torch.randn_like(x) * 0.1 # Perturbation

+ x = self.conv_pre(x)

+ x = x * torch.tanh(F.softplus(x))

+

+ for i in range(self.num_upsamples):

+ # upsampling

+ x = self.ups[i](x)

+ # nsf

+ x_source = self.noise_convs[i](har_source)

+ x = x + x_source

+ # AMP blocks

+ xs = None

+ for j in range(self.num_kernels):

+ if xs is None:

+ xs = self.resblocks[i * self.num_kernels + j](x)

+ else:

+ xs += self.resblocks[i * self.num_kernels + j](x)

+ x = xs / self.num_kernels

+

+ # post conv

+ x = self.activation_post(x)

+ x = self.conv_post(x)

+ x = torch.tanh(x)

+ return x

+

+ def remove_weight_norm(self):

+ for l in self.ups:

+ remove_weight_norm(l)

+ for l in self.resblocks:

+ l.remove_weight_norm()

+ remove_weight_norm(self.conv_pre)

+

+ def eval(self, inference=False):

+ super(Generator, self).eval()

+ # don't remove weight norm while validation in training loop

+ if inference:

+ self.remove_weight_norm()

+

+ def inference(self, mel, f0):

+ MAX_WAV_VALUE = 32768.0

+ audio = self.forward(mel, f0, False)

+ audio = audio.squeeze() # collapse all dimension except time axis

+ audio = MAX_WAV_VALUE * audio

+ audio = audio.clamp(min=-MAX_WAV_VALUE, max=MAX_WAV_VALUE-1)

+ audio = audio.short()

+ return audio

+

+ def pitch2wav(self, f0):

+ MAX_WAV_VALUE = 32768.0

+ # nsf

+ f0 = f0[:, None]

+ f0 = self.f0_upsamp(f0).transpose(1, 2)

+ har_source = self.m_source(f0)

+ audio = har_source.transpose(1, 2)

+ audio = audio.squeeze() # collapse all dimension except time axis

+ audio = MAX_WAV_VALUE * audio

+ audio = audio.clamp(min=-MAX_WAV_VALUE, max=MAX_WAV_VALUE-1)

+ audio = audio.short()

+ return audio

diff --git a/bigvgan/model/nsf.py b/bigvgan/model/nsf.py

new file mode 100644

index 0000000..1e9e6c7

--- /dev/null

+++ b/bigvgan/model/nsf.py

@@ -0,0 +1,394 @@

+import torch

+import numpy as np

+import sys

+import torch.nn.functional as torch_nn_func

+

+

+class PulseGen(torch.nn.Module):

+ """Definition of Pulse train generator

+

+ There are many ways to implement pulse generator.

+ Here, PulseGen is based on SinGen. For a perfect

+ """

+

+ def __init__(self, samp_rate, pulse_amp=0.1, noise_std=0.003, voiced_threshold=0):

+ super(PulseGen, self).__init__()

+ self.pulse_amp = pulse_amp

+ self.sampling_rate = samp_rate

+ self.voiced_threshold = voiced_threshold

+ self.noise_std = noise_std

+ self.l_sinegen = SineGen(

+ self.sampling_rate,

+ harmonic_num=0,

+ sine_amp=self.pulse_amp,

+ noise_std=0,

+ voiced_threshold=self.voiced_threshold,

+ flag_for_pulse=True,

+ )

+

+ def forward(self, f0):

+ """Pulse train generator

+ pulse_train, uv = forward(f0)

+ input F0: tensor(batchsize=1, length, dim=1)

+ f0 for unvoiced steps should be 0

+ output pulse_train: tensor(batchsize=1, length, dim)

+ output uv: tensor(batchsize=1, length, 1)

+

+ Note: self.l_sine doesn't make sure that the initial phase of

+ a voiced segment is np.pi, the first pulse in a voiced segment

+ may not be at the first time step within a voiced segment

+ """

+ with torch.no_grad():

+ sine_wav, uv, noise = self.l_sinegen(f0)

+

+ # sine without additive noise

+ pure_sine = sine_wav - noise

+

+ # step t corresponds to a pulse if

+ # sine[t] > sine[t+1] & sine[t] > sine[t-1]

+ # & sine[t-1], sine[t+1], and sine[t] are voiced

+ # or

+ # sine[t] is voiced, sine[t-1] is unvoiced

+ # we use torch.roll to simulate sine[t+1] and sine[t-1]

+ sine_1 = torch.roll(pure_sine, shifts=1, dims=1)

+ uv_1 = torch.roll(uv, shifts=1, dims=1)

+ uv_1[:, 0, :] = 0

+ sine_2 = torch.roll(pure_sine, shifts=-1, dims=1)

+ uv_2 = torch.roll(uv, shifts=-1, dims=1)

+ uv_2[:, -1, :] = 0

+

+ loc = (pure_sine > sine_1) * (pure_sine > sine_2) \

+ * (uv_1 > 0) * (uv_2 > 0) * (uv > 0) \

+ + (uv_1 < 1) * (uv > 0)

+

+ # pulse train without noise

+ pulse_train = pure_sine * loc

+

+ # additive noise to pulse train

+ # note that noise from sinegen is zero in voiced regions

+ pulse_noise = torch.randn_like(pure_sine) * self.noise_std

+

+ # with additive noise on pulse, and unvoiced regions

+ pulse_train += pulse_noise * loc + pulse_noise * (1 - uv)

+ return pulse_train, sine_wav, uv, pulse_noise

+

+

+class SignalsConv1d(torch.nn.Module):

+ """Filtering input signal with time invariant filter

+ Note: FIRFilter conducted filtering given fixed FIR weight

+ SignalsConv1d convolves two signals

+ Note: this is based on torch.nn.functional.conv1d

+

+ """

+

+ def __init__(self):

+ super(SignalsConv1d, self).__init__()

+

+ def forward(self, signal, system_ir):

+ """output = forward(signal, system_ir)

+

+ signal: (batchsize, length1, dim)

+ system_ir: (length2, dim)

+

+ output: (batchsize, length1, dim)

+ """

+ if signal.shape[-1] != system_ir.shape[-1]:

+ print("Error: SignalsConv1d expects shape:")

+ print("signal (batchsize, length1, dim)")

+ print("system_id (batchsize, length2, dim)")

+ print("But received signal: {:s}".format(str(signal.shape)))

+ print(" system_ir: {:s}".format(str(system_ir.shape)))

+ sys.exit(1)

+ padding_length = system_ir.shape[0] - 1

+ groups = signal.shape[-1]

+

+ # pad signal on the left

+ signal_pad = torch_nn_func.pad(signal.permute(0, 2, 1), (padding_length, 0))

+ # prepare system impulse response as (dim, 1, length2)

+ # also flip the impulse response

+ ir = torch.flip(system_ir.unsqueeze(1).permute(2, 1, 0), dims=[2])

+ # convolute

+ output = torch_nn_func.conv1d(signal_pad, ir, groups=groups)

+ return output.permute(0, 2, 1)

+

+

+class CyclicNoiseGen_v1(torch.nn.Module):

+ """CyclicnoiseGen_v1

+ Cyclic noise with a single parameter of beta.

+ Pytorch v1 implementation assumes f_t is also fixed

+ """

+

+ def __init__(self, samp_rate, noise_std=0.003, voiced_threshold=0):

+ super(CyclicNoiseGen_v1, self).__init__()

+ self.samp_rate = samp_rate

+ self.noise_std = noise_std

+ self.voiced_threshold = voiced_threshold

+

+ self.l_pulse = PulseGen(

+ samp_rate,

+ pulse_amp=1.0,

+ noise_std=noise_std,

+ voiced_threshold=voiced_threshold,

+ )

+ self.l_conv = SignalsConv1d()

+

+ def noise_decay(self, beta, f0mean):

+ """decayed_noise = noise_decay(beta, f0mean)

+ decayed_noise = n[t]exp(-t * f_mean / beta / samp_rate)

+

+ beta: (dim=1) or (batchsize=1, 1, dim=1)

+ f0mean (batchsize=1, 1, dim=1)

+

+ decayed_noise (batchsize=1, length, dim=1)

+ """

+ with torch.no_grad():

+ # exp(-1.0 n / T) < 0.01 => n > -log(0.01)*T = 4.60*T

+ # truncate the noise when decayed by -40 dB

+ length = 4.6 * self.samp_rate / f0mean

+ length = length.int()

+ time_idx = torch.arange(0, length, device=beta.device)

+ time_idx = time_idx.unsqueeze(0).unsqueeze(2)

+ time_idx = time_idx.repeat(beta.shape[0], 1, beta.shape[2])

+

+ noise = torch.randn(time_idx.shape, device=beta.device)

+

+ # due to Pytorch implementation, use f0_mean as the f0 factor

+ decay = torch.exp(-time_idx * f0mean / beta / self.samp_rate)

+ return noise * self.noise_std * decay

+

+ def forward(self, f0s, beta):

+ """Producde cyclic-noise"""

+ # pulse train

+ pulse_train, sine_wav, uv, noise = self.l_pulse(f0s)

+ pure_pulse = pulse_train - noise

+

+ # decayed_noise (length, dim=1)

+ if (uv < 1).all():

+ # all unvoiced

+ cyc_noise = torch.zeros_like(sine_wav)

+ else:

+ f0mean = f0s[uv > 0].mean()

+

+ decayed_noise = self.noise_decay(beta, f0mean)[0, :, :]

+ # convolute

+ cyc_noise = self.l_conv(pure_pulse, decayed_noise)

+

+ # add noise in invoiced segments

+ cyc_noise = cyc_noise + noise * (1.0 - uv)

+ return cyc_noise, pulse_train, sine_wav, uv, noise

+

+

+class SineGen(torch.nn.Module):

+ """Definition of sine generator

+ SineGen(samp_rate, harmonic_num = 0,

+ sine_amp = 0.1, noise_std = 0.003,

+ voiced_threshold = 0,

+ flag_for_pulse=False)

+

+ samp_rate: sampling rate in Hz

+ harmonic_num: number of harmonic overtones (default 0)

+ sine_amp: amplitude of sine-wavefrom (default 0.1)

+ noise_std: std of Gaussian noise (default 0.003)

+ voiced_thoreshold: F0 threshold for U/V classification (default 0)

+ flag_for_pulse: this SinGen is used inside PulseGen (default False)

+

+ Note: when flag_for_pulse is True, the first time step of a voiced

+ segment is always sin(np.pi) or cos(0)

+ """

+

+ def __init__(

+ self,

+ samp_rate,

+ harmonic_num=0,

+ sine_amp=0.1,

+ noise_std=0.003,

+ voiced_threshold=0,

+ flag_for_pulse=False,

+ ):

+ super(SineGen, self).__init__()

+ self.sine_amp = sine_amp

+ self.noise_std = noise_std

+ self.harmonic_num = harmonic_num

+ self.dim = self.harmonic_num + 1

+ self.sampling_rate = samp_rate

+ self.voiced_threshold = voiced_threshold

+ self.flag_for_pulse = flag_for_pulse

+

+ def _f02uv(self, f0):

+ # generate uv signal

+ uv = torch.ones_like(f0)

+ uv = uv * (f0 > self.voiced_threshold)

+ return uv

+

+ def _f02sine(self, f0_values):

+ """f0_values: (batchsize, length, dim)

+ where dim indicates fundamental tone and overtones

+ """

+ # convert to F0 in rad. The interger part n can be ignored

+ # because 2 * np.pi * n doesn't affect phase

+ rad_values = (f0_values / self.sampling_rate) % 1

+

+ # initial phase noise (no noise for fundamental component)

+ rand_ini = torch.rand(

+ f0_values.shape[0], f0_values.shape[2], device=f0_values.device

+ )

+ rand_ini[:, 0] = 0

+ rad_values[:, 0, :] = rad_values[:, 0, :] + rand_ini

+

+ # instantanouse phase sine[t] = sin(2*pi \sum_i=1 ^{t} rad)

+ if not self.flag_for_pulse:

+ # for normal case

+

+ # To prevent torch.cumsum numerical overflow,

+ # it is necessary to add -1 whenever \sum_k=1^n rad_value_k > 1.

+ # Buffer tmp_over_one_idx indicates the time step to add -1.

+ # This will not change F0 of sine because (x-1) * 2*pi = x * 2*pi

+ tmp_over_one = torch.cumsum(rad_values, 1) % 1

+ tmp_over_one_idx = (tmp_over_one[:, 1:, :] - tmp_over_one[:, :-1, :]) < 0

+ cumsum_shift = torch.zeros_like(rad_values)

+ cumsum_shift[:, 1:, :] = tmp_over_one_idx * -1.0

+

+ sines = torch.sin(

+ torch.cumsum(rad_values + cumsum_shift, dim=1) * 2 * np.pi

+ )

+ else:

+ # If necessary, make sure that the first time step of every

+ # voiced segments is sin(pi) or cos(0)

+ # This is used for pulse-train generation

+

+ # identify the last time step in unvoiced segments

+ uv = self._f02uv(f0_values)

+ uv_1 = torch.roll(uv, shifts=-1, dims=1)

+ uv_1[:, -1, :] = 1

+ u_loc = (uv < 1) * (uv_1 > 0)

+

+ # get the instantanouse phase

+ tmp_cumsum = torch.cumsum(rad_values, dim=1)

+ # different batch needs to be processed differently

+ for idx in range(f0_values.shape[0]):

+ temp_sum = tmp_cumsum[idx, u_loc[idx, :, 0], :]

+ temp_sum[1:, :] = temp_sum[1:, :] - temp_sum[0:-1, :]

+ # stores the accumulation of i.phase within

+ # each voiced segments

+ tmp_cumsum[idx, :, :] = 0

+ tmp_cumsum[idx, u_loc[idx, :, 0], :] = temp_sum

+

+ # rad_values - tmp_cumsum: remove the accumulation of i.phase

+ # within the previous voiced segment.

+ i_phase = torch.cumsum(rad_values - tmp_cumsum, dim=1)

+

+ # get the sines

+ sines = torch.cos(i_phase * 2 * np.pi)

+ return sines

+

+ def forward(self, f0):

+ """sine_tensor, uv = forward(f0)

+ input F0: tensor(batchsize=1, length, dim=1)

+ f0 for unvoiced steps should be 0

+ output sine_tensor: tensor(batchsize=1, length, dim)

+ output uv: tensor(batchsize=1, length, 1)

+ """

+ with torch.no_grad():

+ f0_buf = torch.zeros(f0.shape[0], f0.shape[1], self.dim, device=f0.device)

+ # fundamental component

+ f0_buf[:, :, 0] = f0[:, :, 0]

+ for idx in np.arange(self.harmonic_num):

+ # idx + 2: the (idx+1)-th overtone, (idx+2)-th harmonic

+ f0_buf[:, :, idx + 1] = f0_buf[:, :, 0] * (idx + 2)

+

+ # generate sine waveforms

+ sine_waves = self._f02sine(f0_buf) * self.sine_amp

+

+ # generate uv signal

+ # uv = torch.ones(f0.shape)

+ # uv = uv * (f0 > self.voiced_threshold)

+ uv = self._f02uv(f0)

+

+ # noise: for unvoiced should be similar to sine_amp

+ # std = self.sine_amp/3 -> max value ~ self.sine_amp

+ # . for voiced regions is self.noise_std

+ noise_amp = uv * self.noise_std + (1 - uv) * self.sine_amp / 3

+ noise = noise_amp * torch.randn_like(sine_waves)

+

+ # first: set the unvoiced part to 0 by uv

+ # then: additive noise

+ sine_waves = sine_waves * uv + noise

+ return sine_waves

+

+

+class SourceModuleCycNoise_v1(torch.nn.Module):

+ """SourceModuleCycNoise_v1

+ SourceModule(sampling_rate, noise_std=0.003, voiced_threshod=0)

+ sampling_rate: sampling_rate in Hz

+

+ noise_std: std of Gaussian noise (default: 0.003)

+ voiced_threshold: threshold to set U/V given F0 (default: 0)

+

+ cyc, noise, uv = SourceModuleCycNoise_v1(F0_upsampled, beta)

+ F0_upsampled (batchsize, length, 1)

+ beta (1)

+ cyc (batchsize, length, 1)

+ noise (batchsize, length, 1)

+ uv (batchsize, length, 1)

+ """

+

+ def __init__(self, sampling_rate, noise_std=0.003, voiced_threshod=0):

+ super(SourceModuleCycNoise_v1, self).__init__()

+ self.sampling_rate = sampling_rate

+ self.noise_std = noise_std

+ self.l_cyc_gen = CyclicNoiseGen_v1(sampling_rate, noise_std, voiced_threshod)

+

+ def forward(self, f0_upsamped, beta):

+ """

+ cyc, noise, uv = SourceModuleCycNoise_v1(F0, beta)

+ F0_upsampled (batchsize, length, 1)

+ beta (1)

+ cyc (batchsize, length, 1)

+ noise (batchsize, length, 1)

+ uv (batchsize, length, 1)

+ """

+ # source for harmonic branch

+ cyc, pulse, sine, uv, add_noi = self.l_cyc_gen(f0_upsamped, beta)

+

+ # source for noise branch, in the same shape as uv

+ noise = torch.randn_like(uv) * self.noise_std / 3

+ return cyc, noise, uv

+

+

+class SourceModuleHnNSF(torch.nn.Module):

+ def __init__(

+ self,

+ sampling_rate=32000,

+ sine_amp=0.1,

+ add_noise_std=0.003,

+ voiced_threshod=0,

+ ):

+ super(SourceModuleHnNSF, self).__init__()

+ harmonic_num = 10

+ self.sine_amp = sine_amp

+ self.noise_std = add_noise_std

+

+ # to produce sine waveforms

+ self.l_sin_gen = SineGen(

+ sampling_rate, harmonic_num, sine_amp, add_noise_std, voiced_threshod

+ )

+

+ # to merge source harmonics into a single excitation

+ self.l_tanh = torch.nn.Tanh()

+ self.register_buffer('merge_w', torch.FloatTensor([[

+ 0.2942, -0.2243, 0.0033, -0.0056, -0.0020, -0.0046,

+ 0.0221, -0.0083, -0.0241, -0.0036, -0.0581]]))

+ self.register_buffer('merge_b', torch.FloatTensor([0.0008]))

+

+ def forward(self, x):

+ """

+ Sine_source = SourceModuleHnNSF(F0_sampled)

+ F0_sampled (batchsize, length, 1)

+ Sine_source (batchsize, length, 1)

+ """

+ # source for harmonic branch

+ sine_wavs = self.l_sin_gen(x)

+ sine_wavs = torch_nn_func.linear(

+ sine_wavs, self.merge_w) + self.merge_b

+ sine_merge = self.l_tanh(sine_wavs)

+ return sine_merge

diff --git a/bigvgan_pretrain/README.md b/bigvgan_pretrain/README.md

new file mode 100644

index 0000000..0bf434e

--- /dev/null

+++ b/bigvgan_pretrain/README.md

@@ -0,0 +1,5 @@

+Path for:

+

+ nsf_bigvgan_pretrain_32K.pth

+

+ DownLoad link:https://github.com/PlayVoice/NSF-BigVGAN/releases/tag/augment

diff --git a/configs/base.yaml b/configs/base.yaml

new file mode 100644

index 0000000..a7e52cc

--- /dev/null

+++ b/configs/base.yaml

@@ -0,0 +1,40 @@

+train:

+ seed: 37

+ train_files: "files/train.txt"

+ valid_files: "files/valid.txt"

+ log_dir: 'logs/grad_svc'

+ n_epochs: 10000

+ learning_rate: 1e-4

+ batch_size: 16

+ test_size: 4

+ test_step: 1

+ save_step: 1

+ pretrain: ""

+#############################

+data:

+ segment_size: 16000 # WARNING: base on hop_length

+ max_wav_value: 32768.0

+ sampling_rate: 32000

+ filter_length: 1024

+ hop_length: 320

+ win_length: 1024

+ mel_channels: 100

+ mel_fmin: 40.0

+ mel_fmax: 16000.0

+#############################

+grad:

+ n_mels: 100

+ n_vecs: 256

+ n_pits: 256

+ n_spks: 256

+ n_embs: 64

+

+ # encoder parameters

+ n_enc_channels: 192

+ filter_channels: 768

+

+ # decoder parameters

+ dec_dim: 64

+ beta_min: 0.05

+ beta_max: 20.0

+ pe_scale: 1000

diff --git a/grad/LICENSE b/grad/LICENSE

new file mode 100644

index 0000000..e1c1351

--- /dev/null

+++ b/grad/LICENSE

@@ -0,0 +1,19 @@

+Copyright (c) 2021 Huawei Technologies Co., Ltd.

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

\ No newline at end of file

diff --git a/grad/__init__.py b/grad/__init__.py

new file mode 100644

index 0000000..e69de29

diff --git a/grad/base.py b/grad/base.py

new file mode 100644

index 0000000..7294dcb

--- /dev/null

+++ b/grad/base.py

@@ -0,0 +1,29 @@

+import numpy as np

+import torch

+

+

+class BaseModule(torch.nn.Module):

+ def __init__(self):

+ super(BaseModule, self).__init__()

+

+ @property

+ def nparams(self):

+ """

+ Returns number of trainable parameters of the module.

+ """

+ num_params = 0

+ for name, param in self.named_parameters():

+ if param.requires_grad:

+ num_params += np.prod(param.detach().cpu().numpy().shape)

+ return num_params

+

+

+ def relocate_input(self, x: list):

+ """

+ Relocates provided tensors to the same device set for the module.

+ """

+ device = next(self.parameters()).device

+ for i in range(len(x)):

+ if isinstance(x[i], torch.Tensor) and x[i].device != device:

+ x[i] = x[i].to(device)

+ return x

diff --git a/grad/diffusion.py b/grad/diffusion.py

new file mode 100644

index 0000000..3462999

--- /dev/null

+++ b/grad/diffusion.py

@@ -0,0 +1,273 @@

+import math

+import torch

+from einops import rearrange

+from grad.base import BaseModule

+

+

+class Mish(BaseModule):

+ def forward(self, x):

+ return x * torch.tanh(torch.nn.functional.softplus(x))

+

+

+class Upsample(BaseModule):

+ def __init__(self, dim):

+ super(Upsample, self).__init__()

+ self.conv = torch.nn.ConvTranspose2d(dim, dim, 4, 2, 1)

+

+ def forward(self, x):

+ return self.conv(x)

+

+

+class Downsample(BaseModule):

+ def __init__(self, dim):

+ super(Downsample, self).__init__()

+ self.conv = torch.nn.Conv2d(dim, dim, 3, 2, 1)

+

+ def forward(self, x):

+ return self.conv(x)

+

+

+class Rezero(BaseModule):

+ def __init__(self, fn):

+ super(Rezero, self).__init__()

+ self.fn = fn

+ self.g = torch.nn.Parameter(torch.zeros(1))

+

+ def forward(self, x):

+ return self.fn(x) * self.g

+

+

+class Block(BaseModule):

+ def __init__(self, dim, dim_out, groups=8):

+ super(Block, self).__init__()

+ self.block = torch.nn.Sequential(torch.nn.Conv2d(dim, dim_out, 3,

+ padding=1), torch.nn.GroupNorm(

+ groups, dim_out), Mish())

+

+ def forward(self, x, mask):

+ output = self.block(x * mask)

+ return output * mask

+

+

+class ResnetBlock(BaseModule):

+ def __init__(self, dim, dim_out, time_emb_dim, groups=8):

+ super(ResnetBlock, self).__init__()

+ self.mlp = torch.nn.Sequential(Mish(), torch.nn.Linear(time_emb_dim,

+ dim_out))

+

+ self.block1 = Block(dim, dim_out, groups=groups)

+ self.block2 = Block(dim_out, dim_out, groups=groups)

+ if dim != dim_out:

+ self.res_conv = torch.nn.Conv2d(dim, dim_out, 1)

+ else:

+ self.res_conv = torch.nn.Identity()

+

+ def forward(self, x, mask, time_emb):

+ h = self.block1(x, mask)

+ h += self.mlp(time_emb).unsqueeze(-1).unsqueeze(-1)

+ h = self.block2(h, mask)

+ output = h + self.res_conv(x * mask)

+ return output

+

+

+class LinearAttention(BaseModule):

+ def __init__(self, dim, heads=4, dim_head=32):

+ super(LinearAttention, self).__init__()

+ self.heads = heads

+ hidden_dim = dim_head * heads

+ self.to_qkv = torch.nn.Conv2d(dim, hidden_dim * 3, 1, bias=False)

+ self.to_out = torch.nn.Conv2d(hidden_dim, dim, 1)

+

+ def forward(self, x):

+ b, c, h, w = x.shape

+ qkv = self.to_qkv(x)

+ q, k, v = rearrange(qkv, 'b (qkv heads c) h w -> qkv b heads c (h w)',

+ heads = self.heads, qkv=3)

+ k = k.softmax(dim=-1)

+ context = torch.einsum('bhdn,bhen->bhde', k, v)

+ out = torch.einsum('bhde,bhdn->bhen', context, q)

+ out = rearrange(out, 'b heads c (h w) -> b (heads c) h w',

+ heads=self.heads, h=h, w=w)

+ return self.to_out(out)

+

+

+class Residual(BaseModule):

+ def __init__(self, fn):

+ super(Residual, self).__init__()

+ self.fn = fn

+

+ def forward(self, x, *args, **kwargs):

+ output = self.fn(x, *args, **kwargs) + x

+ return output

+

+

+class SinusoidalPosEmb(BaseModule):

+ def __init__(self, dim):

+ super(SinusoidalPosEmb, self).__init__()

+ self.dim = dim

+

+ def forward(self, x, scale=1000):

+ device = x.device

+ half_dim = self.dim // 2

+ emb = math.log(10000) / (half_dim - 1)

+ emb = torch.exp(torch.arange(half_dim, device=device).float() * -emb)

+ emb = scale * x.unsqueeze(1) * emb.unsqueeze(0)

+ emb = torch.cat((emb.sin(), emb.cos()), dim=-1)

+ return emb

+

+

+class GradLogPEstimator2d(BaseModule):

+ def __init__(self, dim, dim_mults=(1, 2, 4), emb_dim=64, n_mels=100,

+ groups=8, pe_scale=1000):

+ super(GradLogPEstimator2d, self).__init__()

+ self.dim = dim

+ self.dim_mults = dim_mults

+ self.emb_dim = emb_dim

+ self.groups = groups

+ self.pe_scale = pe_scale

+

+ self.spk_mlp = torch.nn.Sequential(torch.nn.Linear(emb_dim, emb_dim * 4), Mish(),

+ torch.nn.Linear(emb_dim * 4, n_mels))

+ self.time_pos_emb = SinusoidalPosEmb(dim)

+ self.mlp = torch.nn.Sequential(torch.nn.Linear(dim, dim * 4), Mish(),

+ torch.nn.Linear(dim * 4, dim))

+

+ dims = [2 + 1, *map(lambda m: dim * m, dim_mults)]

+ in_out = list(zip(dims[:-1], dims[1:]))

+ self.downs = torch.nn.ModuleList([])

+ self.ups = torch.nn.ModuleList([])

+ num_resolutions = len(in_out)

+

+ for ind, (dim_in, dim_out) in enumerate(in_out): # 2 downs

+ is_last = ind >= (num_resolutions - 1)

+ self.downs.append(torch.nn.ModuleList([

+ ResnetBlock(dim_in, dim_out, time_emb_dim=dim),

+ ResnetBlock(dim_out, dim_out, time_emb_dim=dim),

+ Residual(Rezero(LinearAttention(dim_out))),

+ Downsample(dim_out) if not is_last else torch.nn.Identity()]))

+

+ mid_dim = dims[-1]

+ self.mid_block1 = ResnetBlock(mid_dim, mid_dim, time_emb_dim=dim)

+ self.mid_attn = Residual(Rezero(LinearAttention(mid_dim)))

+ self.mid_block2 = ResnetBlock(mid_dim, mid_dim, time_emb_dim=dim)

+

+ for ind, (dim_in, dim_out) in enumerate(reversed(in_out[1:])): # 2 ups

+ self.ups.append(torch.nn.ModuleList([

+ ResnetBlock(dim_out * 2, dim_in, time_emb_dim=dim),

+ ResnetBlock(dim_in, dim_in, time_emb_dim=dim),

+ Residual(Rezero(LinearAttention(dim_in))),

+ Upsample(dim_in)]))

+ self.final_block = Block(dim, dim)

+ self.final_conv = torch.nn.Conv2d(dim, 1, 1)

+

+ def forward(self, spk, x, mask, mu, t):

+ s = self.spk_mlp(spk)

+

+ t = self.time_pos_emb(t, scale=self.pe_scale)

+ t = self.mlp(t)

+

+ s = s.unsqueeze(-1).repeat(1, 1, x.shape[-1])

+ x = torch.stack([mu, x, s], 1)

+ mask = mask.unsqueeze(1)

+

+ hiddens = []

+ masks = [mask]

+ for resnet1, resnet2, attn, downsample in self.downs:

+ mask_down = masks[-1]

+ x = resnet1(x, mask_down, t)

+ x = resnet2(x, mask_down, t)

+ x = attn(x)

+ hiddens.append(x)

+ x = downsample(x * mask_down)

+ masks.append(mask_down[:, :, :, ::2])

+

+ masks = masks[:-1]

+ mask_mid = masks[-1]

+ x = self.mid_block1(x, mask_mid, t)

+ x = self.mid_attn(x)

+ x = self.mid_block2(x, mask_mid, t)

+

+ for resnet1, resnet2, attn, upsample in self.ups:

+ mask_up = masks.pop()

+ x = torch.cat((x, hiddens.pop()), dim=1)

+ x = resnet1(x, mask_up, t)

+ x = resnet2(x, mask_up, t)

+ x = attn(x)

+ x = upsample(x * mask_up)

+

+ x = self.final_block(x, mask)

+ output = self.final_conv(x * mask)

+

+ return (output * mask).squeeze(1)

+

+

+def get_noise(t, beta_init, beta_term, cumulative=False):

+ if cumulative:

+ noise = beta_init*t + 0.5*(beta_term - beta_init)*(t**2)

+ else:

+ noise = beta_init + (beta_term - beta_init)*t

+ return noise

+

+

+class Diffusion(BaseModule):

+ def __init__(self, n_mels, dim, emb_dim=64,

+ beta_min=0.05, beta_max=20, pe_scale=1000):

+ super(Diffusion, self).__init__()

+ self.n_mels = n_mels

+ self.beta_min = beta_min

+ self.beta_max = beta_max

+ self.estimator = GradLogPEstimator2d(dim,

+ n_mels=n_mels,

+ emb_dim=emb_dim,

+ pe_scale=pe_scale)

+

+ def forward_diffusion(self, mel, mask, mu, t):

+ time = t.unsqueeze(-1).unsqueeze(-1)

+ cum_noise = get_noise(time, self.beta_min, self.beta_max, cumulative=True)

+ mean = mel*torch.exp(-0.5*cum_noise) + mu*(1.0 - torch.exp(-0.5*cum_noise))

+ variance = 1.0 - torch.exp(-cum_noise)

+ z = torch.randn(mel.shape, dtype=mel.dtype, device=mel.device,

+ requires_grad=False)

+ xt = mean + z * torch.sqrt(variance)

+ return xt * mask, z * mask

+

+ @torch.no_grad()

+ def reverse_diffusion(self, spk, z, mask, mu, n_timesteps, stoc=False):

+ h = 1.0 / n_timesteps

+ xt = z * mask

+ for i in range(n_timesteps):

+ t = (1.0 - (i + 0.5)*h) * torch.ones(z.shape[0], dtype=z.dtype,

+ device=z.device)

+ time = t.unsqueeze(-1).unsqueeze(-1)

+ noise_t = get_noise(time, self.beta_min, self.beta_max,

+ cumulative=False)

+ if stoc: # adds stochastic term

+ dxt_det = 0.5 * (mu - xt) - self.estimator(spk, xt, mask, mu, t)

+ dxt_det = dxt_det * noise_t * h

+ dxt_stoc = torch.randn(z.shape, dtype=z.dtype, device=z.device,

+ requires_grad=False)

+ dxt_stoc = dxt_stoc * torch.sqrt(noise_t * h)

+ dxt = dxt_det + dxt_stoc

+ else:

+ dxt = 0.5 * (mu - xt - self.estimator(spk, xt, mask, mu, t))

+ dxt = dxt * noise_t * h

+ xt = (xt - dxt) * mask

+ return xt

+

+ @torch.no_grad()

+ def forward(self, spk, z, mask, mu, n_timesteps, stoc=False):

+ return self.reverse_diffusion(spk, z, mask, mu, n_timesteps, stoc)

+

+ def loss_t(self, spk, mel, mask, mu, t):

+ xt, z = self.forward_diffusion(mel, mask, mu, t)

+ time = t.unsqueeze(-1).unsqueeze(-1)

+ cum_noise = get_noise(time, self.beta_min, self.beta_max, cumulative=True)

+ noise_estimation = self.estimator(spk, xt, mask, mu, t)

+ noise_estimation *= torch.sqrt(1.0 - torch.exp(-cum_noise))

+ loss = torch.sum((noise_estimation + z)**2) / (torch.sum(mask)*self.n_mels)

+ return loss, xt

+

+ def compute_loss(self, spk, mel, mask, mu, offset=1e-5):

+ t = torch.rand(mel.shape[0], dtype=mel.dtype, device=mel.device, requires_grad=False)

+ t = torch.clamp(t, offset, 1.0 - offset)

+ return self.loss_t(spk, mel, mask, mu, t)

diff --git a/grad/encoder.py b/grad/encoder.py

new file mode 100644

index 0000000..cb1e118

--- /dev/null

+++ b/grad/encoder.py

@@ -0,0 +1,300 @@

+import math

+import torch

+

+from grad.base import BaseModule

+from grad.utils import sequence_mask, convert_pad_shape

+

+

+class LayerNorm(BaseModule):

+ def __init__(self, channels, eps=1e-4):

+ super(LayerNorm, self).__init__()

+ self.channels = channels

+ self.eps = eps

+

+ self.gamma = torch.nn.Parameter(torch.ones(channels))

+ self.beta = torch.nn.Parameter(torch.zeros(channels))

+

+ def forward(self, x):

+ n_dims = len(x.shape)

+ mean = torch.mean(x, 1, keepdim=True)

+ variance = torch.mean((x - mean)**2, 1, keepdim=True)

+

+ x = (x - mean) * torch.rsqrt(variance + self.eps)

+

+ shape = [1, -1] + [1] * (n_dims - 2)

+ x = x * self.gamma.view(*shape) + self.beta.view(*shape)

+ return x

+

+

+class ConvReluNorm(BaseModule):

+ def __init__(self, in_channels, hidden_channels, out_channels, kernel_size,

+ n_layers, p_dropout):

+ super(ConvReluNorm, self).__init__()

+ self.in_channels = in_channels

+ self.hidden_channels = hidden_channels

+ self.out_channels = out_channels

+ self.kernel_size = kernel_size

+ self.n_layers = n_layers

+ self.p_dropout = p_dropout

+

+ self.conv_pre = torch.nn.Conv1d(in_channels, hidden_channels,

+ kernel_size, padding=kernel_size//2)

+ self.conv_layers = torch.nn.ModuleList()

+ self.norm_layers = torch.nn.ModuleList()

+ self.conv_layers.append(torch.nn.Conv1d(hidden_channels, hidden_channels,

+ kernel_size, padding=kernel_size//2))

+ self.norm_layers.append(LayerNorm(hidden_channels))

+ self.relu_drop = torch.nn.Sequential(torch.nn.ReLU(), torch.nn.Dropout(p_dropout))

+ for _ in range(n_layers - 1):

+ self.conv_layers.append(torch.nn.Conv1d(hidden_channels, hidden_channels,

+ kernel_size, padding=kernel_size//2))

+ self.norm_layers.append(LayerNorm(hidden_channels))

+ self.proj = torch.nn.Conv1d(hidden_channels, out_channels, 1)

+ self.proj.weight.data.zero_()

+ self.proj.bias.data.zero_()

+

+ def forward(self, x, x_mask):

+ x = self.conv_pre(x)

+ x_org = x

+ for i in range(self.n_layers):

+ x = self.conv_layers[i](x * x_mask)

+ x = self.norm_layers[i](x)

+ x = self.relu_drop(x)

+ x = x_org + self.proj(x)

+ return x * x_mask

+

+

+class MultiHeadAttention(BaseModule):

+ def __init__(self, channels, out_channels, n_heads, window_size=None,

+ heads_share=True, p_dropout=0.0, proximal_bias=False,

+ proximal_init=False):

+ super(MultiHeadAttention, self).__init__()

+ assert channels % n_heads == 0

+

+ self.channels = channels

+ self.out_channels = out_channels

+ self.n_heads = n_heads

+ self.window_size = window_size

+ self.heads_share = heads_share

+ self.proximal_bias = proximal_bias

+ self.p_dropout = p_dropout

+ self.attn = None

+

+ self.k_channels = channels // n_heads

+ self.conv_q = torch.nn.Conv1d(channels, channels, 1)

+ self.conv_k = torch.nn.Conv1d(channels, channels, 1)

+ self.conv_v = torch.nn.Conv1d(channels, channels, 1)

+ if window_size is not None:

+ n_heads_rel = 1 if heads_share else n_heads

+ rel_stddev = self.k_channels**-0.5

+ self.emb_rel_k = torch.nn.Parameter(torch.randn(n_heads_rel,

+ window_size * 2 + 1, self.k_channels) * rel_stddev)

+ self.emb_rel_v = torch.nn.Parameter(torch.randn(n_heads_rel,

+ window_size * 2 + 1, self.k_channels) * rel_stddev)

+ self.conv_o = torch.nn.Conv1d(channels, out_channels, 1)

+ self.drop = torch.nn.Dropout(p_dropout)

+

+ torch.nn.init.xavier_uniform_(self.conv_q.weight)

+ torch.nn.init.xavier_uniform_(self.conv_k.weight)

+ if proximal_init:

+ self.conv_k.weight.data.copy_(self.conv_q.weight.data)

+ self.conv_k.bias.data.copy_(self.conv_q.bias.data)

+ torch.nn.init.xavier_uniform_(self.conv_v.weight)

+

+ def forward(self, x, c, attn_mask=None):

+ q = self.conv_q(x)

+ k = self.conv_k(c)

+ v = self.conv_v(c)

+

+ x, self.attn = self.attention(q, k, v, mask=attn_mask)

+

+ x = self.conv_o(x)

+ return x

+

+ def attention(self, query, key, value, mask=None):

+ b, d, t_s, t_t = (*key.size(), query.size(2))

+ query = query.view(b, self.n_heads, self.k_channels, t_t).transpose(2, 3)

+ key = key.view(b, self.n_heads, self.k_channels, t_s).transpose(2, 3)

+ value = value.view(b, self.n_heads, self.k_channels, t_s).transpose(2, 3)

+

+ scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(self.k_channels)

+ if self.window_size is not None:

+ assert t_s == t_t, "Relative attention is only available for self-attention."

+ key_relative_embeddings = self._get_relative_embeddings(self.emb_rel_k, t_s)

+ rel_logits = self._matmul_with_relative_keys(query, key_relative_embeddings)

+ rel_logits = self._relative_position_to_absolute_position(rel_logits)

+ scores_local = rel_logits / math.sqrt(self.k_channels)

+ scores = scores + scores_local

+ if self.proximal_bias:

+ assert t_s == t_t, "Proximal bias is only available for self-attention."

+ scores = scores + self._attention_bias_proximal(t_s).to(device=scores.device,

+ dtype=scores.dtype)

+ if mask is not None:

+ scores = scores.masked_fill(mask == 0, -1e4)

+ p_attn = torch.nn.functional.softmax(scores, dim=-1)

+ p_attn = self.drop(p_attn)

+ output = torch.matmul(p_attn, value)

+ if self.window_size is not None:

+ relative_weights = self._absolute_position_to_relative_position(p_attn)

+ value_relative_embeddings = self._get_relative_embeddings(self.emb_rel_v, t_s)

+ output = output + self._matmul_with_relative_values(relative_weights,

+ value_relative_embeddings)

+ output = output.transpose(2, 3).contiguous().view(b, d, t_t)

+ return output, p_attn

+

+ def _matmul_with_relative_values(self, x, y):

+ ret = torch.matmul(x, y.unsqueeze(0))

+ return ret

+

+ def _matmul_with_relative_keys(self, x, y):

+ ret = torch.matmul(x, y.unsqueeze(0).transpose(-2, -1))

+ return ret

+

+ def _get_relative_embeddings(self, relative_embeddings, length):

+ pad_length = max(length - (self.window_size + 1), 0)

+ slice_start_position = max((self.window_size + 1) - length, 0)

+ slice_end_position = slice_start_position + 2 * length - 1

+ if pad_length > 0:

+ padded_relative_embeddings = torch.nn.functional.pad(

+ relative_embeddings, convert_pad_shape([[0, 0],

+ [pad_length, pad_length], [0, 0]]))

+ else:

+ padded_relative_embeddings = relative_embeddings

+ used_relative_embeddings = padded_relative_embeddings[:,

+ slice_start_position:slice_end_position]

+ return used_relative_embeddings

+

+ def _relative_position_to_absolute_position(self, x):

+ batch, heads, length, _ = x.size()

+ x = torch.nn.functional.pad(x, convert_pad_shape([[0,0],[0,0],[0,0],[0,1]]))

+ x_flat = x.view([batch, heads, length * 2 * length])

+ x_flat = torch.nn.functional.pad(x_flat, convert_pad_shape([[0,0],[0,0],[0,length-1]]))

+ x_final = x_flat.view([batch, heads, length+1, 2*length-1])[:, :, :length, length-1:]

+ return x_final

+

+ def _absolute_position_to_relative_position(self, x):

+ batch, heads, length, _ = x.size()

+ x = torch.nn.functional.pad(x, convert_pad_shape([[0, 0], [0, 0], [0, 0], [0, length-1]]))

+ x_flat = x.view([batch, heads, length**2 + length*(length - 1)])

+ x_flat = torch.nn.functional.pad(x_flat, convert_pad_shape([[0, 0], [0, 0], [length, 0]]))

+ x_final = x_flat.view([batch, heads, length, 2*length])[:,:,:,1:]

+ return x_final

+

+ def _attention_bias_proximal(self, length):

+ r = torch.arange(length, dtype=torch.float32)

+ diff = torch.unsqueeze(r, 0) - torch.unsqueeze(r, 1)

+ return torch.unsqueeze(torch.unsqueeze(-torch.log1p(torch.abs(diff)), 0), 0)

+

+

+class FFN(BaseModule):

+ def __init__(self, in_channels, out_channels, filter_channels, kernel_size,

+ p_dropout=0.0):

+ super(FFN, self).__init__()

+ self.in_channels = in_channels

+ self.out_channels = out_channels

+ self.filter_channels = filter_channels

+ self.kernel_size = kernel_size

+ self.p_dropout = p_dropout

+

+ self.conv_1 = torch.nn.Conv1d(in_channels, filter_channels, kernel_size,

+ padding=kernel_size//2)

+ self.conv_2 = torch.nn.Conv1d(filter_channels, out_channels, kernel_size,

+ padding=kernel_size//2)

+ self.drop = torch.nn.Dropout(p_dropout)

+

+ def forward(self, x, x_mask):

+ x = self.conv_1(x * x_mask)

+ x = torch.relu(x)

+ x = self.drop(x)

+ x = self.conv_2(x * x_mask)

+ return x * x_mask

+

+

+class Encoder(BaseModule):

+ def __init__(self, hidden_channels, filter_channels, n_heads, n_layers,

+ kernel_size=1, p_dropout=0.0, window_size=None, **kwargs):

+ super(Encoder, self).__init__()

+ self.hidden_channels = hidden_channels

+ self.filter_channels = filter_channels

+ self.n_heads = n_heads

+ self.n_layers = n_layers

+ self.kernel_size = kernel_size

+ self.p_dropout = p_dropout

+ self.window_size = window_size

+

+ self.drop = torch.nn.Dropout(p_dropout)

+ self.attn_layers = torch.nn.ModuleList()

+ self.norm_layers_1 = torch.nn.ModuleList()

+ self.ffn_layers = torch.nn.ModuleList()

+ self.norm_layers_2 = torch.nn.ModuleList()

+ for _ in range(self.n_layers):

+ self.attn_layers.append(MultiHeadAttention(hidden_channels, hidden_channels,

+ n_heads, window_size=window_size, p_dropout=p_dropout))

+ self.norm_layers_1.append(LayerNorm(hidden_channels))

+ self.ffn_layers.append(FFN(hidden_channels, hidden_channels,

+ filter_channels, kernel_size, p_dropout=p_dropout))

+ self.norm_layers_2.append(LayerNorm(hidden_channels))

+

+ def forward(self, x, x_mask):

+ attn_mask = x_mask.unsqueeze(2) * x_mask.unsqueeze(-1)

+ for i in range(self.n_layers):

+ x = x * x_mask

+ y = self.attn_layers[i](x, x, attn_mask)

+ y = self.drop(y)

+ x = self.norm_layers_1[i](x + y)

+ y = self.ffn_layers[i](x, x_mask)

+ y = self.drop(y)

+ x = self.norm_layers_2[i](x + y)

+ x = x * x_mask

+ return x

+

+

+class TextEncoder(BaseModule):

+ def __init__(self, n_vecs, n_mels, n_embs,

+ n_channels,

+ filter_channels,

+ n_heads=2,

+ n_layers=6,

+ kernel_size=3,

+ p_dropout=0.1,

+ window_size=4):

+ super(TextEncoder, self).__init__()

+ self.n_vecs = n_vecs

+ self.n_mels = n_mels

+ self.n_embs = n_embs

+ self.n_channels = n_channels

+ self.filter_channels = filter_channels

+ self.n_heads = n_heads

+ self.n_layers = n_layers

+ self.kernel_size = kernel_size

+ self.p_dropout = p_dropout

+ self.window_size = window_size

+

+ self.prenet = ConvReluNorm(n_vecs,

+ n_channels,

+ n_channels,

+ kernel_size=5,

+ n_layers=3,

+ p_dropout=0.5)

+

+ self.encoder = Encoder(n_channels + n_embs + n_embs,

+ filter_channels,

+ n_heads,

+ n_layers,

+ kernel_size,

+ p_dropout,

+ window_size=window_size)

+

+ self.proj_m = torch.nn.Conv1d(n_channels + n_embs + n_embs, n_mels, 1)

+

+ def forward(self, x_lengths, x, pit, spk):

+ x_mask = torch.unsqueeze(sequence_mask(x_lengths, x.size(2)), 1).to(x.dtype)

+ # despeaker

+ x = self.prenet(x, x_mask)

+ # pitch + speaker

+ spk = spk.unsqueeze(-1).repeat(1, 1, x.shape[-1])

+ x = torch.cat([x, pit], dim=1)

+ x = torch.cat([x, spk], dim=1)

+ x = self.encoder(x, x_mask)

+ mu = self.proj_m(x) * x_mask

+ return mu, x_mask

diff --git a/grad/model.py b/grad/model.py

new file mode 100644

index 0000000..9ce962e

--- /dev/null

+++ b/grad/model.py

@@ -0,0 +1,125 @@

+import math

+import torch

+

+from grad.base import BaseModule

+from grad.encoder import TextEncoder

+from grad.diffusion import Diffusion

+from grad.utils import f0_to_coarse, rand_ids_segments, slice_segments

+

+

+class GradTTS(BaseModule):

+ def __init__(self, n_mels, n_vecs, n_pits, n_spks, n_embs,

+ n_enc_channels, filter_channels,

+ dec_dim, beta_min, beta_max, pe_scale):

+ super(GradTTS, self).__init__()

+ # common

+ self.n_mels = n_mels

+ self.n_vecs = n_vecs

+ self.n_spks = n_spks

+ self.n_embs = n_embs

+ # encoder

+ self.n_enc_channels = n_enc_channels

+ self.filter_channels = filter_channels

+ # decoder

+ self.dec_dim = dec_dim

+ self.beta_min = beta_min

+ self.beta_max = beta_max

+ self.pe_scale = pe_scale

+

+ self.pit_emb = torch.nn.Embedding(n_pits, n_embs)

+ self.spk_emb = torch.nn.Linear(n_spks, n_embs)

+ self.encoder = TextEncoder(n_vecs,

+ n_mels,

+ n_embs,

+ n_enc_channels,

+ filter_channels)

+ self.decoder = Diffusion(n_mels, dec_dim, n_embs, beta_min, beta_max, pe_scale)

+

+ @torch.no_grad()

+ def forward(self, lengths, vec, pit, spk, n_timesteps, temperature=1.0, stoc=False):

+ """

+ Generates mel-spectrogram from vec. Returns:

+ 1. encoder outputs

+ 2. decoder outputs

+

+ Args:

+ lengths (torch.Tensor): lengths of texts in batch.

+ vec (torch.Tensor): batch of speech vec

+ pit (torch.Tensor): batch of speech pit

+ spk (torch.Tensor): batch of speaker

+

+ n_timesteps (int): number of steps to use for reverse diffusion in decoder.

+ temperature (float, optional): controls variance of terminal distribution.

+ stoc (bool, optional): flag that adds stochastic term to the decoder sampler.

+ Usually, does not provide synthesis improvements.

+ """

+ lengths, vec, pit, spk = self.relocate_input([lengths, vec, pit, spk])

+

+ # Get pitch embedding

+ pit = self.pit_emb(f0_to_coarse(pit))

+

+ # Get speaker embedding

+ spk = self.spk_emb(spk)

+

+ # Transpose

+ vec = torch.transpose(vec, 1, -1)

+ pit = torch.transpose(pit, 1, -1)

+

+ # Get encoder_outputs `mu_x`

+ mu_x, mask_x = self.encoder(lengths, vec, pit, spk)

+ encoder_outputs = mu_x

+